The Limitations of Seed Data and the Importance of Real Data for Email Performance

Delivering legitimate emails to the inbox is no longer a given, and this is no secret to senders: without regularly monitoring performance and reputation, there’s a risk that email campaigns won’t achieve the desired results – whether from a commercial perspective or as a foundation for effective customer communication and retention.

For performance monitoring, data is the key element. It forms the basis for identifying potential reputation and deliverability problems at an early stage and being able to react to them. A sender without access to reliable data from their email programme is essentially flying blind.

Senders can usually access various data sources, regardless of whether they use their own infrastructure or an email service provider (ESP) for sending their emails and for performance monitoring.

However, not all of this data is equally meaningful or reliable. Only upon closer inspection does it become clear how strongly subtle details can influence the success of email campaigns:

Delivery and deliverability: a small but crucial difference with a big impact

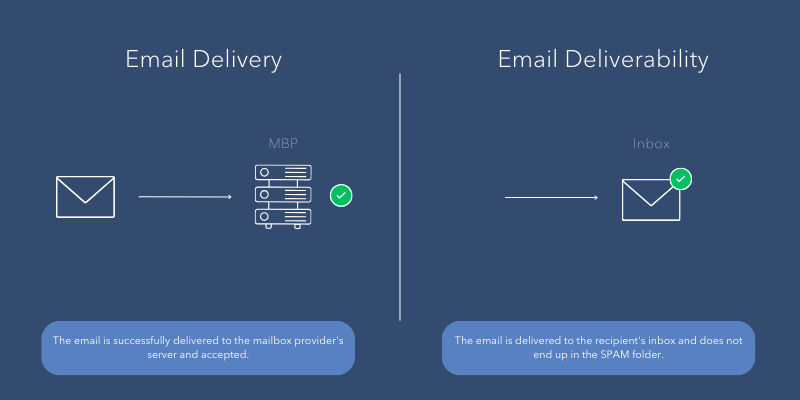

At first glance, the two terms “delivery” and “deliverability” may seem very similar, but they describe two fundamentally different success factors for email campaigns.

Campaign delivery rates, for example, are often provided by ESPs as part of their service to the sender. The sender receives a metric showing the percentage of emails that have been accepted by the mailbox providers.

But the devil is in the detail: an email may be accepted by the mailbox provider without ever landing in the inbox. After acceptance, the mailbox provider can still deliver the email to the spam folder. This is precisely where the major difference in performance lies: between delivery (acceptance) and deliverability (inbox placement). The chance that an email delivered to the spam folder will be opened by the recipient tends towards zero, which significantly reduces the success of a campaign.
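To make the distinction concrete, here is a minimal sketch with made-up numbers. The counts and the names `sent`, `accepted` and `inboxed` are illustrative assumptions, not figures from a real campaign or fields from any particular ESP report.

```python
# Hypothetical campaign counts (illustrative assumptions, not real data).
sent = 10_000      # emails handed over to the mailbox providers
accepted = 9_800   # emails accepted by the mailbox providers (not bounced)
inboxed = 7_350    # accepted emails that were actually placed in the inbox


def rate(part: int, whole: int) -> float:
    """Return part/whole as a percentage, or 0.0 if whole is zero."""
    return 100.0 * part / whole if whole else 0.0


# Delivery: share of sent emails that the mailbox providers accepted.
delivery_rate = rate(accepted, sent)

# Deliverability: share of accepted emails that reached the inbox.
inbox_placement_rate = rate(inboxed, accepted)

# Accepted emails that did not reach the inbox (spam folder or missing).
not_in_inbox = accepted - inboxed

print(f"Delivery rate:        {delivery_rate:.1f}%")
print(f"Inbox placement rate: {inbox_placement_rate:.1f}%")
print(f"Accepted but not in the inbox: {not_in_inbox} emails")
```

In this invented example the delivery rate looks healthy, yet a quarter of the accepted emails never reach the inbox. A report that shows only the first figure would hide that gap.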

In addition to delivery rates, senders also have access to other key figures, such as open and click-through rates, unsubscribe rates or spam complaint rates.

These key figures undoubtedly provide an important and solid foundation and should be continuously monitored and analysed by the sender. However, they do not provide any direct insight into deliverability and thus into delivery to the inbox.

To gain precisely this insight, so-called “seed lists” are often used in practice.

However, the crucial question here is: How reliable is seed list data, really?

What is a seed list and how does it work?

A seed list consists of a set of test email addresses set up by the seed list provider at various mailbox providers. These addresses are then integrated into the sending of email campaigns in order to obtain information about where the emails ultimately land – in the inbox, in the spam folder, or, if things don’t go as planned, neither in the inbox nor in the spam folder. The placement data received is then processed by the seed list provider and made available to the sender through a reporting tool for analysis.

This concept was quite useful in the past, when user engagement played a much smaller role in spam filter algorithms and inbox placement.

Nowadays, the results of seed lists should be viewed with caution, as they only reflect reality to a very limited extent. Mailbox providers such as Google, Yahoo or Microsoft increasingly factor in both positive and negative user interactions – like opening, reading, clicking, forwarding, complaining about, deleting or simply ignoring emails – when making spam filter decisions and determining inbox placement.

However, seed email addresses are “inactive” email accounts – they don’t behave like human users. They don’t open or read emails, click on links, unsubscribe or complain. As a result, positive or negative user interactions that have such an important influence on deliverability are entirely absent from seed lists.

So, caution is advised: while seed lists can provide some insights, the reality can be quite different – something that senders should always keep in mind. Additionally, the costs incurred by using a professional seed list provider should not be overlooked.

There is another option for determining inbox placement – but this alternative approach also has its weaknesses:

Test campaigns on personal test email accounts – their limitations

As a more cost-effective alternative, senders often resort to another method – they set up their own personal test email accounts with various mailbox providers and then analyse the results account by account.

The sender can send their test campaigns to these personal test addresses – a kind of mini seed list – and check where the emails ultimately ended up: in the inbox or in the spam folder.

While this approach may initially appear reasonable, it also has significant weaknesses:

- Limited validity: A handful of test addresses cannot accurately reflect how a campaign with thousands of recipients will be handled by the respective mailbox provider.

- Volume and timing: Mailbox providers consider the sending volume and timing of the campaign in their analysis. What works well for five test addresses today can look quite different with the real campaign in two weeks’ time – for example, on Black Friday with a large recipient list.

- Reputation and user engagement: Sender reputation at the time of sending plays a major role – and this can change quickly, especially when shared IPs are used for sending. Other senders sharing the IP can influence deliverability through their campaigns and their quality. Recipients of the campaign may also complain during the course of the sending process, and it is then quite possible that the message is no longer delivered to the inbox but to the spam folder as a result of the negative user reaction to the campaign.
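The “limited validity” point also has a purely statistical side, which the following sketch illustrates. It uses the Wilson score interval, a standard confidence interval for a proportion, and rests on a generous assumption: that the test addresses behave like a random, independent sample of real recipients. As the points above explain, they do not, so the real uncertainty is larger still. The function name and the sample sizes are illustrative.

```python
from math import sqrt


def wilson_interval(successes: int, trials: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a proportion (z = 1.96 gives roughly 95%)."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    p = successes / trials
    denom = 1 + z * z / trials
    centre = (p + z * z / (2 * trials)) / denom
    half_width = z * sqrt(p * (1 - p) / trials + z * z / (4 * trials * trials)) / denom
    # Clamp to [0, 1] to guard against floating-point overshoot.
    return max(0.0, centre - half_width), min(1.0, centre + half_width)


# Hypothetical result: every test email landed in the inbox.
small_low, small_high = wilson_interval(5, 5)          # five personal test accounts
large_low, large_high = wilson_interval(5_000, 5_000)  # five thousand observations

print(f"5 of 5 in inbox:       plausible inbox rate {small_low:.1%} to {small_high:.1%}")
print(f"5000 of 5000 in inbox: plausible inbox rate {large_low:.1%} to {large_high:.1%}")
```

Even a perfect result on five test accounts leaves a very wide range of plausible inbox rates, whereas the same result across thousands of observations narrows it to a fraction of a percentage point.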

In short, many factors are simply not taken into account when sending to your own test email accounts. This means that there is a risk that the test results will correspond to reality only to a very limited extent, or not at all.

Essential – the use of real data

Real data provided directly by mailbox providers, spam filter companies or security vendors is invaluable. It gives senders first-hand feedback, reflects the actual behaviour and real reactions of recipients, and provides a reliable and clear insight into email performance for problem identification and optimisation.

Conclusion: Seed data alone is not a reliable foundation

Today, senders should use as many available data sources as possible (there’s no question about that), but must focus strongly on real data. While seed data or personal test accounts can provide initial indications, they offer only very limited insights into a campaign’s true deliverability, as crucial factors for delivery to the inbox are simply left out.

Real data, in contrast, provides a much more reliable and accurate picture of email performance.

As part of the CSA certification programme, our members receive access to comprehensive real data from our partners at the IP and domain level in the “Certification Monitor” monitoring tool.

This includes:

- Authentication data for security purposes, to identify and address potential vulnerabilities (e.g. DKIM errors, missing DKIM records, or lack of alignment)

- Insights into list hygiene through information on spam trap hits

- Spam complaint rates, which are crucial for IP and domain reputation and thus have a direct impact on deliverability

- Anomaly detection: deviations in inbox and complaint rates
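As an illustration of how complaint rates and anomaly detection fit together, here is a minimal sketch. It is not how the Certification Monitor works internally; the daily figures, the function names and the simple z-score rule with a threshold of 3 are all assumptions chosen for the example.

```python
from statistics import mean, stdev

# Hypothetical daily figures: (delivered emails, spam complaints).
daily = [
    (50_000, 20),
    (48_000, 22),
    (51_000, 19),
    (49_500, 21),
    (50_500, 20),
    (49_000, 23),
    (50_000, 95),  # most recent day: invented complaint spike
]


def complaint_rate(delivered: int, complaints: int) -> float:
    """Complaints per delivered email (0.0 if nothing was delivered)."""
    return complaints / delivered if delivered else 0.0


def is_anomalous(history: list[float], today: float, threshold: float = 3.0) -> bool:
    """Flag today's rate if it lies more than `threshold` sample standard
    deviations away from the mean of the previous days (a simple z-score)."""
    if len(history) < 2:
        return False  # not enough history to estimate the spread
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return today != mu
    return abs(today - mu) / sigma > threshold


rates = [complaint_rate(d, c) for d, c in daily]
baseline, latest = rates[:-1], rates[-1]
flagged = is_anomalous(baseline, latest)

print(f"Baseline complaint rate: {mean(baseline):.4%}")
print(f"Latest complaint rate:   {latest:.4%}")
print("Anomaly detected" if flagged else "Within normal range")
```

A z-score against recent history is only one of many possible rules. The point is that a deviation can only be spotted when real complaint data is available in the first place.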

This real data helps senders to efficiently monitor their reputation and make informed decisions to continuously improve their email programme.

If you have any questions about the CSA certification programme and the available real data, please do not hesitate to contact us.